Yesterday, Y and I took the subway to Manhattan to watch the film Alien: Romulus on the IMAX screen at the AMC 14 on 34th Street.

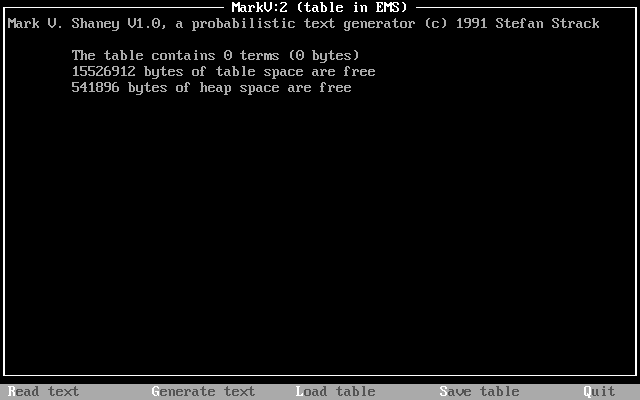

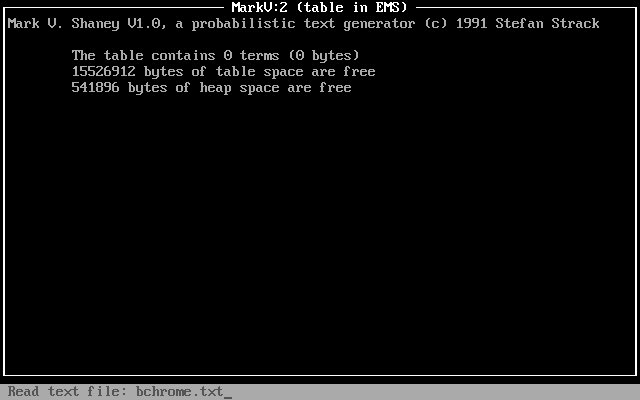

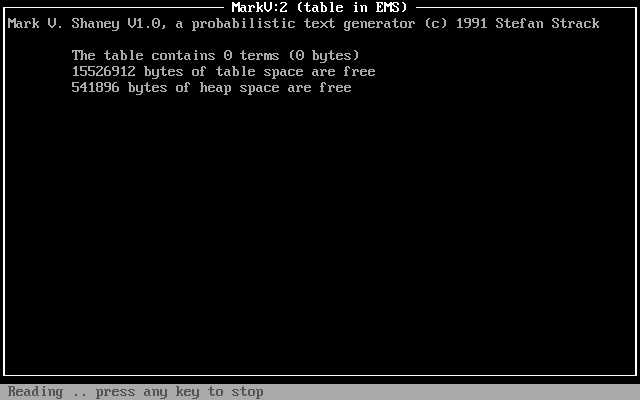

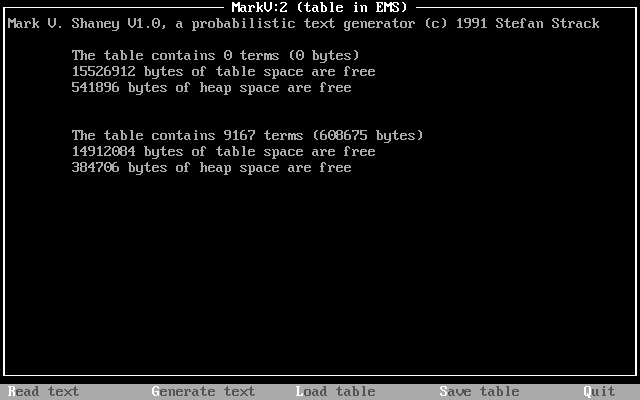

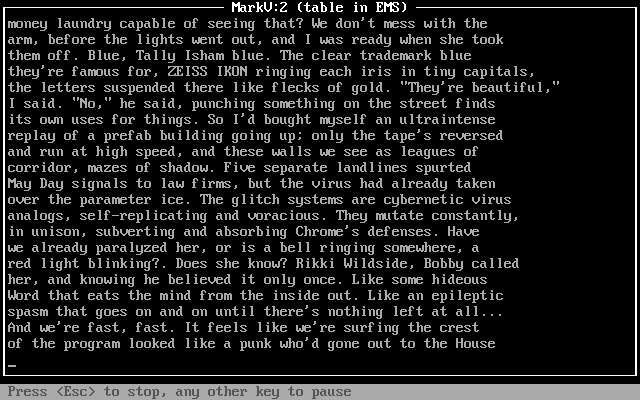

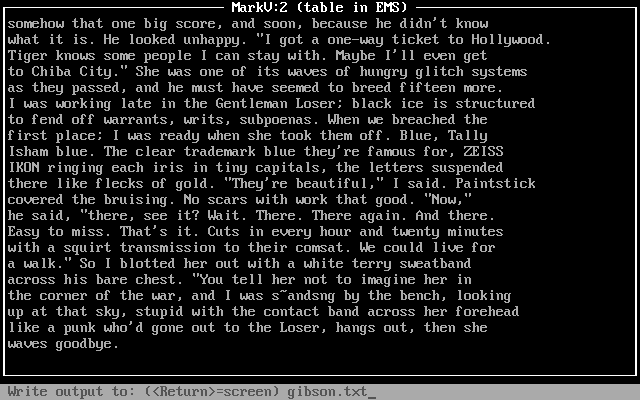

I thought that Alien: Romulus was an interesting story that threaded the needle by connecting the original film Alien (1979), via the first Xenomorph we saw and the android Ash (Ian Holm), to Prometheus (2012) and Alien: Covenant (2017), via the black liquid (hints of the black oil from The X-Files) and the Engineers. The retrocomputers, ASCII text, and a computer with a 3.5″ floppy disk drive made it feel like the same world as Alien. I felt that some of the lines were corny, over-the-top, and unnecessary fan service, but overall, it was an interesting and sometimes exciting addition to the series.

Setting the film itself aside, I have some thoughts about the technologies of presentation and communal engagement with it.

First, movies in theaters, especially IMAX films, are shown with the volume far too loud. Y and I last went to an IMAX film over 10 years ago, but remembering how that experience hurt both of our ears, we planned ahead and brought foam ear plugs. Even our ear plugs, which work wonders at eliminating noise in other settings, were just barely up to the task of keeping the volume of the film presentation at tolerable levels. Let me put that another way: While wearing ear plugs, I was able to hear the film’s dialog, sound effects, and music just fine, and sometimes a little not fine when it got so loud as to overpower the ear plugs. That’s too damn loud. It was only as we were leaving that Y thought we should have checked the decibel levels. Hindsight is 20/20.

Second, I know that to some I might sound like an old man yelling at kids to get off my lawn, but those who have known me a long time know that I’ve been deadly serious about this ever since I started going to see films as a kid. That is, we owe other theatergoers our respect so that everyone can enjoy the film. Carrying on, talking, or using a phone during a movie can disturb others, so we shouldn’t do those things. Unfortunately, some of the other customers, who would have paid the same $30 per ticket we paid, don’t care for social norms and simple decency. It would be one thing if these were kids who didn’t know any better, but these were adults who acted like kids. Hell is other people, I suppose.

Considering these things, I prefer to stay at home to enjoy a film without ear plugs or annoying guests. Of course, I am assuming the neighbors don’t act the fool, which I’ve tried my best to address by following these tips.