I decided to ride Amtrak back home after visiting my folks, because I dislike the indignity of traveling by air in the United States. I’ve had more than my fair share of “random bag checks,” and I disagree with the security theater at TSA checkpoints that does more to insult than protect.

While a train obviously takes longer than a flight, it offers a far more dignified travel experience. You walk from the station to the tracks, board your train, and off you go.

I opted for a roomette aboard Amtrak’s Silver Meteor so that I could sleep more easily on the overnight ride. The trip was scheduled to take about 14 hours but actually took closer to 17. One way, the fare cost only a little more than a plane ticket.

Overall, I enjoyed the trip. Perhaps because the experience was new, I had trouble staying asleep; next time, I might take a sleep aid such as melatonin. Also, as others have noted online, there were delays. Because of them, I missed the dinner service, and since my train offered only dinner and breakfast service, there was no lunch service, even though we arrived in NYC three hours late the next day. Thankfully, I had taken other train travelers’ advice and brought extra water and snacks to tide me over. Next time, though, I might pack an MRE so that I have something more substantial to eat if needed.

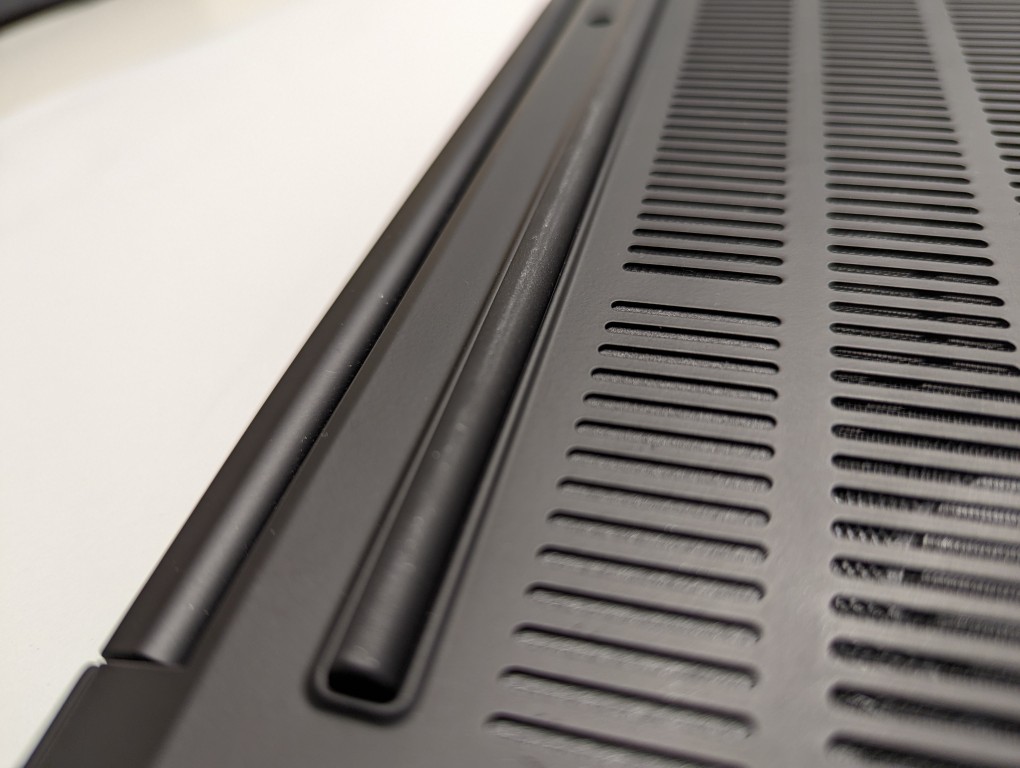

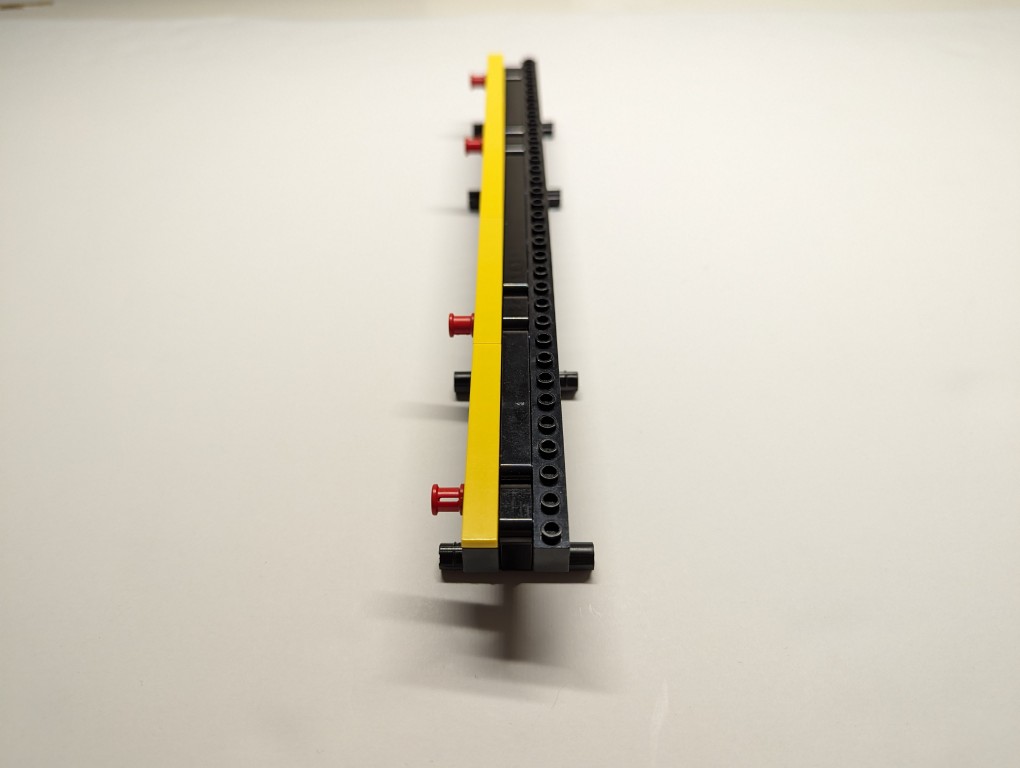

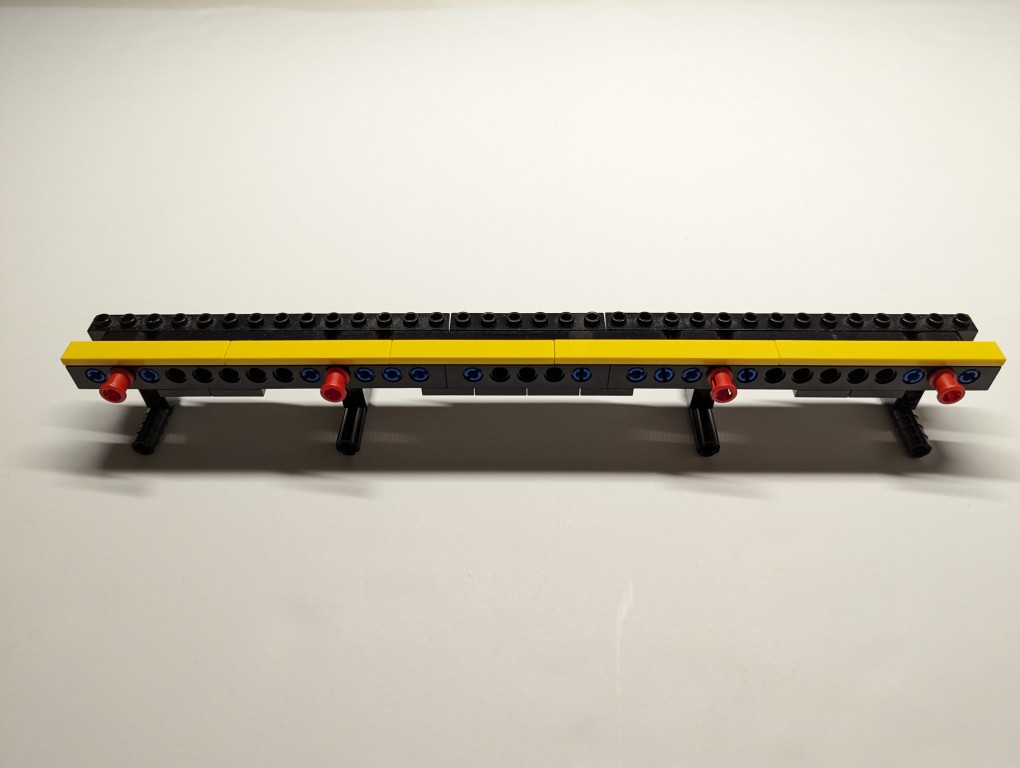

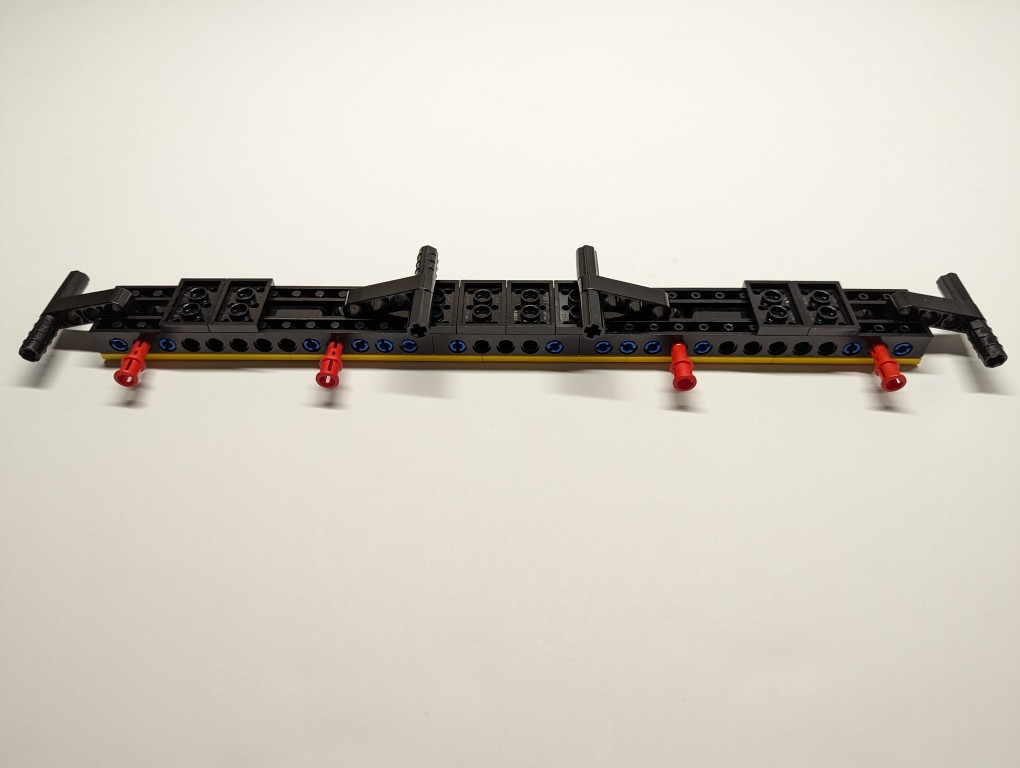

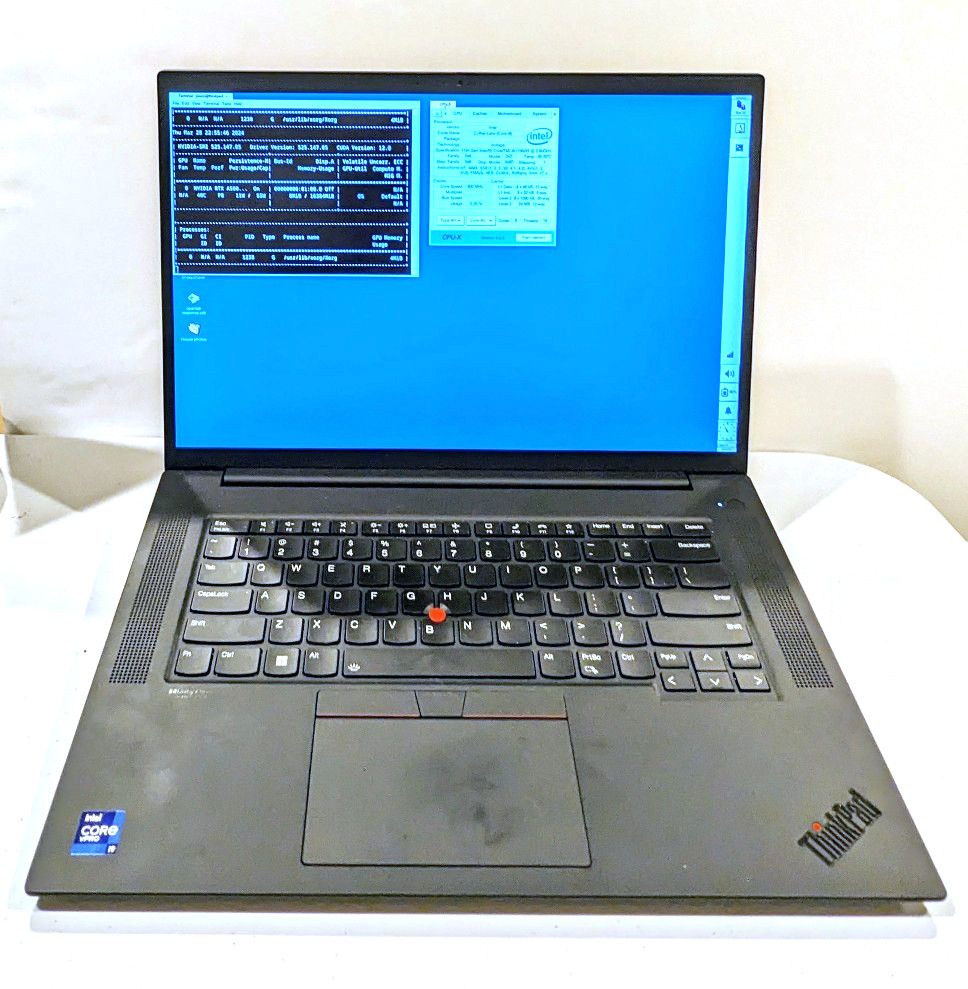

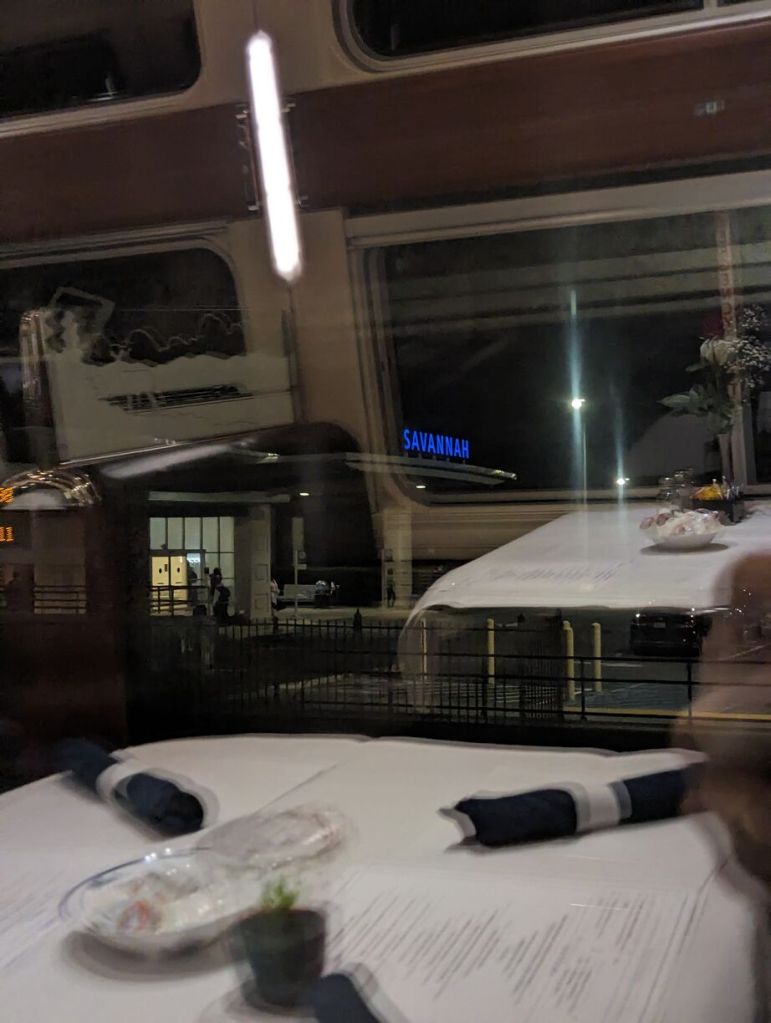

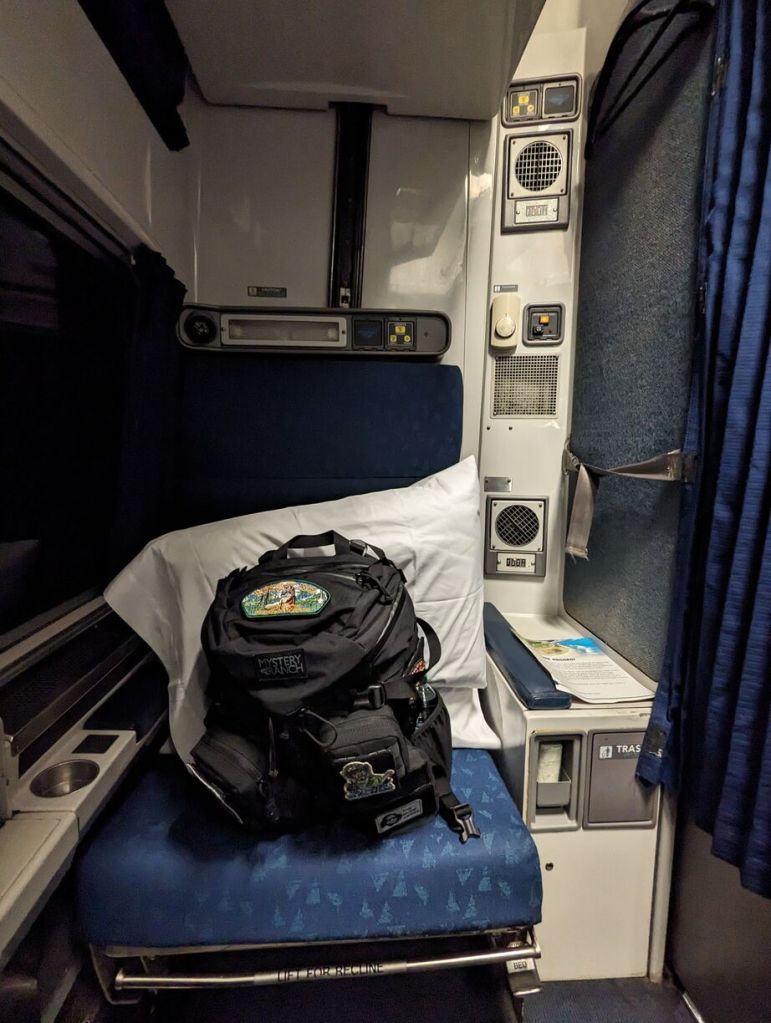

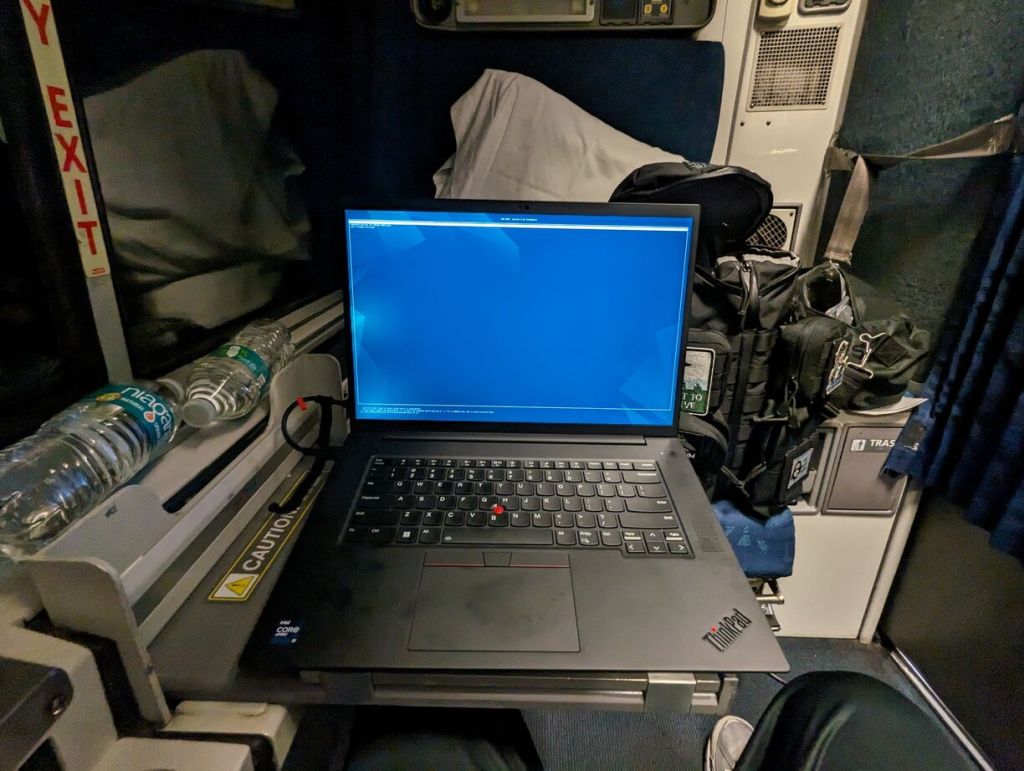

The photos in this post show my trip aboard the Silver Meteor and my roomette. I tried to capture the roomette’s features and amenities, as well as show how much (or how little) legroom there is if you are traveling with another person. This train also has a toilet in the roomette, so if you travel with someone else, you will need to negotiate its use. Finally, I included photos of the dining car and the early-morning breakfast I enjoyed. Because the dining options are limited, the earlier you go to a meal, the more likely it is that the option you want will still be available.

Savannah Amtrak Station

Sleeping Car

Roomette

Roomette Toilet and Folding Sink

Roomette Interior Door and Window to Hallway

Roomette Legroom

Roomette Folding Table

Roomette Bunk Bed

Dining Car

Passing Through Washington, DC

Passing Train