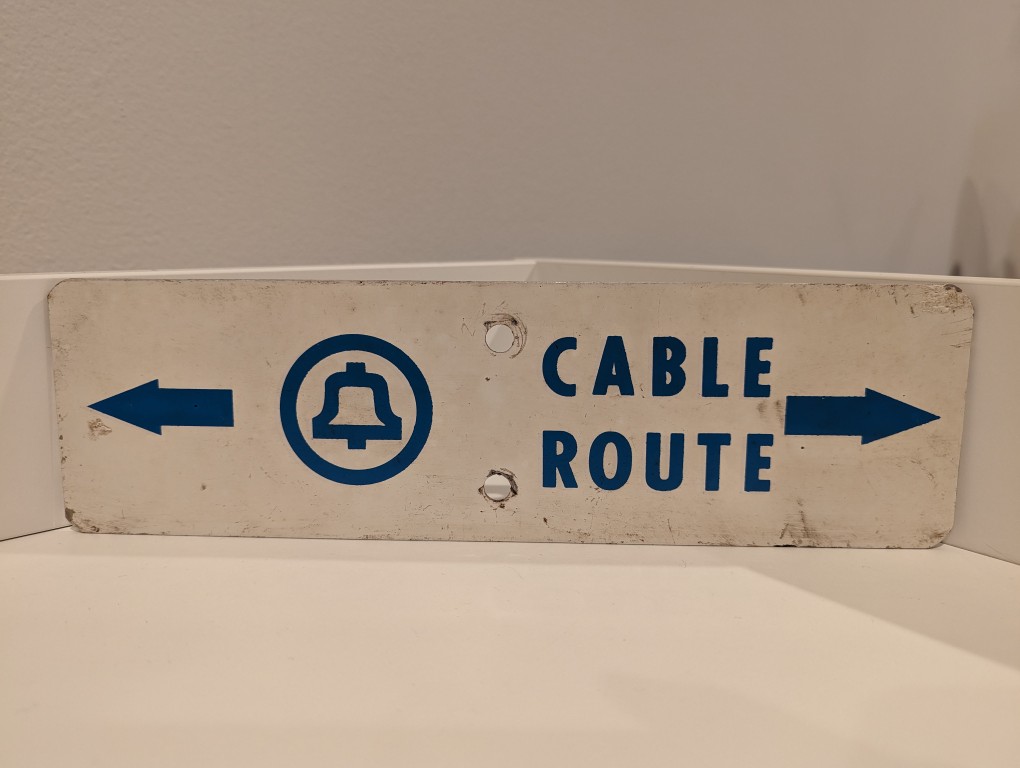

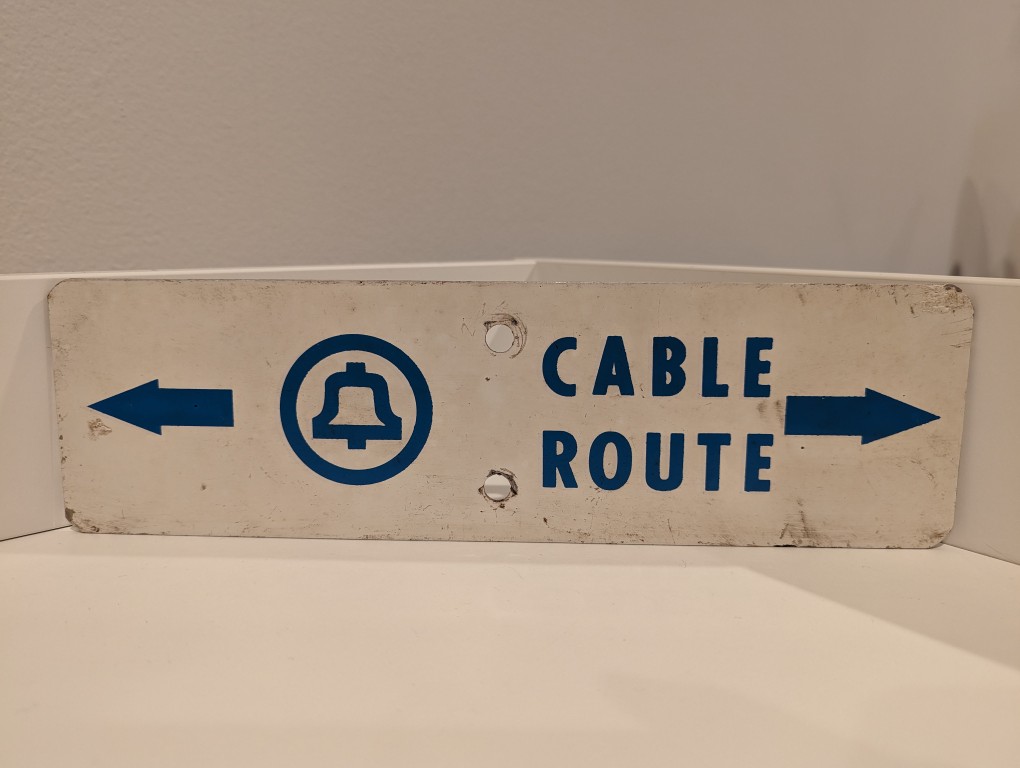

Long before AT&T was having massive data breaches, they were burying cables and putting up these signs in the back country to warn would-be diggers from slicing through buried copper or fiber optic cables.


One of my personal goals for my sabbatical has been to revitalize dynamicsubspace.net after years of declining viewership. I was happy to see the pendulum swing back, with views rising from 18,099 in 2022 to 19,455 in 2023.

Looking further back, you can see in the chart above that my site’s views jumped off the proverbial cliff at the beginning of 2013. There were a few reasons for this. First, I started my first professional employment as a Brittain Fellow at Georgia Tech, which left less time for blogging because I was prepping, teaching, researching, and providing service to the writing program. Second, I deleted most of my social media accounts, which I had used to publicize my posts automatically and which had in turn brought many returning visitors to the site. Third, Google changed how it ranked sites and search results (updates to the Panda algorithm and then the upgrade to Hummingbird), which probably sharply reduced how many visitors found my posts through search.

Some of the things that I’ve been doing to achieve that goal include setting a publishing schedule of one new post per day, Monday through Friday (resulting in over 53k words across 125 new posts this year); refocusing on a combination of how-to and research-based posts; building out and continuously updating a new bibliography page based on my research on teaching about/with generative AI; and analyzing the site with Google Search Console and making the suggested changes (e.g., removing posts with dead links and video).

I did try using some social media to surface my work to new audiences by joining and contributing to online communities. I had the most success with LinkedIn, which I continue to use daily. I had less success with Mastodon, which felt like shouting into the void and yielded no measurable bump to shared content and few genuine connections. I had the least success with Reddit due to frustration with armchair experts and downvotes when I pointed out content theft and fake posts in some of the communities I had joined. Always curating my online footprint, I deleted the Mastodon and Reddit accounts.

I plan to keep working on the site in this manner throughout 2024. I hope the writing, images, and video that I share might be interesting and informative to folks who happen to pass by. Of course, you can drop me a line to let me know if you found something useful–my email address is in the info widget to the right.

As I wrote in my July 2023 post about KPT Bryce, there are image-generating programs that use fractal geometry instead of artificial intelligence (AI).

Another such image generator program is Evolvotron. It can generate fractal-based images with different patterns, colors, and shapes.

It has an interactive interface driven by the user’s choices in response to a field of different images, each generated from a different set of parameters. Starting from this initial field, the user can choose File > Reset (or press R on the keyboard) to generate a new field of images.

When the user likes an image or wants to explore it further, they can right-click it and choose Respawn (regenerate just that one square), Spawn (generate images with adjacent parameters), Spawn recoloured (same originating parameters but different colors), and other functions such as Spawn warped (below), Enlarge (below), and Save.
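To make the Respawn/Spawn idea concrete, here is a hypothetical Python sketch of the interactive-evolution loop the menu describes. It assumes each image is defined by a flat list of numeric parameters; Evolvotron’s own internal representation is more elaborate, and the names `respawn` and `spawn` here are illustrative only, not its code.

```python
import random

# Hypothetical model: an "image" is just a list of numeric parameters.
Params = list[float]


def respawn(n_params: int, rng: random.Random) -> Params:
    """Replace a square with a brand-new random parameter set (like Respawn or Reset)."""
    return [rng.uniform(-1.0, 1.0) for _ in range(n_params)]


def spawn(parent: Params, count: int, rng: random.Random, step: float = 0.1) -> list[Params]:
    """Generate `count` children whose parameters lie near the parent's (like Spawn)."""
    return [[p + rng.gauss(0.0, step) for p in parent] for _ in range(count)]


rng = random.Random(42)
field = [respawn(8, rng) for _ in range(9)]  # initial 3 x 3 field of candidates
chosen = field[4]                            # the user right-clicks one they like
children = spawn(chosen, 8, rng)             # "adjacent" variations to explore next
```

The small `step` keeps children visually related to the chosen parent, while `respawn` jumps to an unrelated point; repeating select-then-spawn is what lets the user steer toward images they like.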

Below are a few images at 1024 x 1024 that I generated with Evolvotron.

I installed Evolvotron from the Debian 12 repositories. It can also be downloaded for various Linux distributions here, and it is available for Mac OS X via Fink.

I registered DynamicSubspace.net in 2007, but I imported content that I had begun writing in 2006. That means that the site’s content has been growing for about 17 years. I’ve written over 700,000 words across over 1,600 posts, not counting the pages with bibliographies and other information. The posts were sorted into over 30 categories and more than 1,300 tags.

Watching the steady decline in site views since the high-water mark of 91,526 views in 2012, I knew that I needed to dig into the site and make its content easier to find for visitors once they land on it, usually from Google and other search engines. The idea is that I have lots of overlapping and related content that might be useful to some visitors. While a search engine points a visitor to one particular post, the visitor might not realize there is related content they could also find useful. So, I want to surface some of that other content and help visitors use the site’s built-in navigation tools to find related content on their own.

The easiest change that I made to my WordPress settings was to enable “Show related content after posts,” which automagically displays previews of three related posts at the bottom of each post’s page and shows how they might be related (e.g., via a shared category or tag).
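WordPress does its own ranking for this feature, and its method isn’t described here. As a rough, hypothetical illustration of relating posts “via a shared category or tag,” the sketch below scores every other post by how many tags and categories it shares with the current one and keeps the top three. The `Post` class and the sample posts are made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    title: str
    tags: set[str] = field(default_factory=set)
    categories: set[str] = field(default_factory=set)


def related(current: Post, posts: list[Post], k: int = 3) -> list[tuple[Post, int]]:
    """Return up to k other posts ranked by the number of shared tags plus categories."""
    scored = []
    for post in posts:
        if post is current:
            continue
        score = len(current.tags & post.tags) + len(current.categories & post.categories)
        if score > 0:  # posts with nothing in common are never suggested
            scored.append((post, score))
    # Highest overlap first; ties broken alphabetically by title for stable output.
    scored.sort(key=lambda pair: (-pair[1], pair[0].title))
    return scored[:k]


# Hypothetical sample posts.
posts = [
    Post("Evolvotron", {"fractals", "linux"}, {"Art"}),
    Post("KPT Bryce", {"fractals"}, {"Art"}),
    Post("Debian tips", {"linux"}, {"Computers"}),
    Post("AI workstation", {"ai", "linux"}, {"Computers"}),
    Post("Old CFP", {"sf"}, {"News"}),
]
for post, score in related(posts[0], posts):
    print(post.title, score)
```

This also shows why the tag cleanup below matters: overlap-based suggestions only work when related posts actually share the same tag names.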

The heavier lifts involved sorting tags by how many posts use them, identifying adjacent/overlapping tags, and consolidating those tags across affected posts. This less granular approach makes it easier to find related content, and it significantly reduced the number of tags my site uses: I got them down to only 177. It’s more logical and less scattershot. The tags appearing on the most posts are listed in the tag cloud widget on the right.
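The post doesn’t say which tools were used for this, so here is a hypothetical sketch of the same sort-and-merge logic applied to exported data: count how many posts use each tag, then rewrite every post’s tags through a mapping from overlapping tags to one preferred tag. The post slugs, tags, and merge mapping are invented for illustration.

```python
from collections import Counter


def tag_counts(posts: dict[str, set[str]]) -> Counter:
    """Count how many posts use each tag (posts maps a post slug to its set of tags)."""
    return Counter(tag for tags in posts.values() for tag in tags)


def consolidate(posts: dict[str, set[str]], merge: dict[str, str]) -> dict[str, set[str]]:
    """Replace each tag with its merge target; tags not in the mapping are kept as-is."""
    return {slug: {merge.get(tag, tag) for tag in tags} for slug, tags in posts.items()}


posts = {
    "post-a": {"sci-fi", "teaching"},
    "post-b": {"science fiction", "pedagogy"},
    "post-c": {"sf", "teaching"},
}
# Hypothetical mapping of adjacent/overlapping tags onto one preferred tag each.
merge = {"sci-fi": "science fiction", "sf": "science fiction", "pedagogy": "teaching"}

print(tag_counts(posts).most_common())    # sorted by how many posts use each tag
cleaned = consolidate(posts, merge)
print(tag_counts(cleaned).most_common())  # fewer, more widely shared tags
```

Because each post’s tags are a set, a post that carried two overlapping tags (say, both “sf” and “sci-fi”) ends up with a single preferred tag rather than a duplicate.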

Similarly, I reduced the number of categories and made sure posts were assigned the appropriate ones. There are now 31 categories. All of these categories are listed in the category cloud on the left, where the size of each is determined by the number of posts in that category. I haven’t finished this work for categories and tags, but it is something I can keep at, improving content associations over time.

Another problem identified with the help of Google Search Console was the number of pages that it wouldn’t index due to dead video links, i.e., dead links to YouTube content. Hunting down those pages took a little more work, but I deleted the dead links and added an update to each page noting that the content either no longer existed or had been removed due to copyright issues.
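Hunting down dead video links can be partly automated. The following is a hypothetical Python sketch, not the process used here: it pulls YouTube video IDs out of a post’s HTML with a regular expression that covers only the common URL shapes, then asks YouTube’s public oEmbed endpoint about each video. It assumes that an HTTP error from that endpoint (for example, 404 for a removed video) means the link deserves a manual look; private or embed-disabled videos may also return errors.

```python
import re
import urllib.error
import urllib.parse
import urllib.request

# Matches watch?v=, embed/, and youtu.be/ URLs; YouTube video IDs are 11 characters long.
YOUTUBE_ID = re.compile(r"(?:youtube\.com/(?:watch\?v=|embed/)|youtu\.be/)([A-Za-z0-9_-]{11})")


def youtube_ids(html: str) -> list[str]:
    """Return the unique YouTube video IDs referenced in a post's HTML, in order of appearance."""
    return list(dict.fromkeys(YOUTUBE_ID.findall(html)))


def looks_dead(video_id: str, timeout: float = 10.0) -> bool:
    """Query YouTube's oEmbed endpoint; treat an HTTP error as a likely dead link."""
    query = urllib.parse.urlencode(
        {"url": f"https://www.youtube.com/watch?v={video_id}", "format": "json"}
    )
    try:
        with urllib.request.urlopen(f"https://www.youtube.com/oembed?{query}", timeout=timeout):
            return False
    except urllib.error.HTTPError:
        # Only HTTP errors are caught; network failures (URLError) propagate
        # instead of being misreported as dead videos.
        return True


sample = '<iframe src="https://www.youtube.com/embed/dQw4w9WgXcQ"></iframe>'
print(youtube_ids(sample))
```

Running `looks_dead` over every ID found in an export of the site’s posts would produce a review list; deciding what to write in place of each dead link is still a manual step.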

While I was digging through those pages with dead video links, I began to reconsider keeping every post, as some of those posts were only links to videos or embedded videos. I decided to delete the posts that consisted only of video with little or no commentary on my part.

Also, I began thinking about posts for long-defunct calls for papers (CFPs) and application announcements. When I originally started my blog, I envisioned it being a hub for SF Studies info, but it was impractical for me to keep up with and share out CFPs and announcements. It was hard enough posting about my own work while doing everything else: studying, teaching, working, etc. Since I never reached the level of re-sharing that I had hoped for, yet a significant amount of this kind of content had accumulated, I decided to delete those posts; they weren’t strongly related to my work, and there’s no requirement for my blog to be a historical record of those things. Furthermore, once removed, these less relevant posts won’t accidentally surface as related posts alongside the content that I actually want to help visitors find. Between the defunct video posts and the CFPs/announcements, I culled 79 posts, leaving 1,543.

One thing that I’m opting not to do is re-enable comments. While allowing visitors to comment on posts and pages can build engagement, in my experience comments have been more focused on criticism, negativity, and spam. I give my email address in the about widget on the right, so folks are free to contact me that way. In the years since I started doing that, I can count on one hand the number of emails I’ve received from visitors, so it seems to be a high enough bar to save me from dealing with comments that I don’t want to police. It also keeps the site focused on the content that I want to publish.

I’ll keep working to improve the overall operation of the site so that it is as useful as possible to its visitors.

As I wrote recently about my summertime studying and documented in my generative artificial intelligence (AI) bibliography, I am learning all that I can about AI: how it’s made, how we should critique it, how we can use it, and how we can teach with it. As with any new technology, the more that we know about it, the better equipped we are to master it and debate it in the public sphere. I don’t think that fear and ignorance about a new technology are good positions to take.

Like many others, I see AI as an inevitable step forward in how we use computers and what we can do with them. However, I don’t think that these technologies should only be under the purview of big companies and their (predominantly) man-child leaders. Having more money and market control does not make one a more ethical practitioner of AI. In fact, it seems that some industry leaders are calling for more governmental oversight and regulation not because they have real worries about AI’s future development but because they are in a leadership position in the field and can likely shape how the industry is regulated through connections with would-be regulators (e.g., the revolving door between industry and government seen at other regulatory agencies).

Of course, having no money or market control in AI does not make one more ethical with AI either. But ensuring that there are open, transparent, and democratic AI technologies creates the potential for a more level playing field. While these technologies can be abused, making them available to all creates the possibility for many others to use AI for good. Additionally, if we were to keep AI behind locked doors, only those with access (legally or not) would control the technology, and nothing would stop other countries, and good or bad actors within them, from using AI however they see fit, for good or ill.

To play my own small role in studying AI, using generative AI, and teaching about AI, I wanted to build my own machine learning-capable workstation. Before making any upgrades, I spent the past few months maxing out what I could do with an Asus Dual RTX 3070 8GB graphics card and 64GB of RAM. I experimented primarily with Stable Diffusion image generation models using Automatic1111’s stable-diffusion-webui and LLaMA text generation models using Georgi Gerganov’s llama.cpp. An 8GB graphics card like the NVIDIA RTX 3070 provides a lot of horsepower with its 5,888 CUDA cores and high bandwidth (448 GB/s) to its on-board memory. Unfortunately, 8GB of on-board memory is too small for larger models or for adjusting models with multiple LoRAs and the like. For text generation, you can split a model’s layers between the graphics card’s memory and your system’s RAM, but this is inefficient and slow compared to loading the entire model into the graphics card’s memory. Therefore, a video card with a significant amount of VRAM is a better solution.
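llama.cpp exposes this GPU/CPU split through its `--n-gpu-layers` (`-ngl`) option, which sets how many layers are offloaded to the GPU. A rough way to pick that number is sketched below in Python, under loudly stated assumptions: every layer is treated as the same size (ignoring embeddings and the output layer), the 36 GB file size is hypothetical, and `reserve_gb` is a guessed allowance for the KV cache, CUDA context, and scratch buffers. LLaMA-65B has 80 transformer layers.

```python
import math


def layers_that_fit(model_gb: float, n_layers: int, vram_gb: float, reserve_gb: float = 1.5) -> int:
    """Estimate how many equal-sized layers fit in VRAM after a hypothetical reserve."""
    per_layer_gb = model_gb / n_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    return min(n_layers, math.floor(usable_gb / per_layer_gb))


# Hypothetical 65B model quantized to about 36 GB, with 80 layers.
for vram in (8.0, 48.0):
    n = layers_that_fit(model_gb=36.0, n_layers=80, vram_gb=vram)
    print(f"{vram:.0f} GB card: {n} of 80 layers on the GPU, {80 - n} left in system RAM")
```

On an 8GB card only a small fraction of the layers fit, so most of each token’s work still happens on the CPU and system RAM, which is why partial offloading helps only modestly.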

For my machine learning-focused upgrade, I first swapped out my system RAM for 128GB of DDR4-3200 (4 x 32GB Corsair, shown above). This allowed me to load 65B-parameter models into system RAM and use my Ryzen 7 5800X 8-core/16-thread CPU to perform the operations. The CPU usage while llama.cpp is processing tokens looks like an EEG:

While running inference on the CPU was certainly useful for my initial experimentation (and the CPU usage graph looks cool), it was exceedingly slow. Even an 8-core/16-thread CPU is ill-suited for AI inference, partly because it lacks the massive parallelism of graphics processing units (GPUs) but perhaps more importantly because of the system memory bottleneck, which is only 25.6 GB/s per channel for DDR4-3200 RAM according to Transcend.
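The 25.6 GB/s figure is the peak for one 64-bit DDR4-3200 channel (3,200 million transfers per second x 8 bytes); the Ryzen 7 5800X has two memory channels, for a theoretical peak of about 51.2 GB/s. A common rule of thumb, sketched below, is that generating each token requires reading every model weight from memory once, so memory bandwidth divided by model size gives an upper bound on tokens per second. This ignores compute limits and caching, and the 36 GB model size is a hypothetical value for a quantized 65B model.

```python
def ddr_bandwidth_gbs(mega_transfers: int, channels: int = 1, bus_bytes: int = 8) -> float:
    """Peak DRAM bandwidth in GB/s: transfers per second x bytes per transfer x channels."""
    return mega_transfers * 1e6 * bus_bytes * channels / 1e9


def max_tokens_per_second(bandwidth_gbs: float, model_gb: float) -> float:
    """Upper bound if each generated token must stream every weight from memory once."""
    return bandwidth_gbs / model_gb


model_gb = 36.0                             # hypothetical quantized 65B model
cpu_bw = ddr_bandwidth_gbs(3200, channels=2)  # dual-channel DDR4-3200
gpu_bw = 768.0                              # RTX A6000 VRAM bandwidth (spec sheet figure)

print(f"CPU + DDR4: at most ~{max_tokens_per_second(cpu_bw, model_gb):.1f} tokens/s")
print(f"RTX A6000:  at most ~{max_tokens_per_second(gpu_bw, model_gb):.1f} tokens/s")
```

Even as a loose ceiling, the roughly fifteenfold bandwidth gap explains most of the difference between CPU and GPU generation speed for the same model.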

Video cards, especially those designed by NVIDIA, provide specialized parallel computing capabilities and enormous memory bandwidth between the GPU and video RAM (VRAM). NVIDIA’s CUDA is a very mature system for parallel processing that has been widely accepted as the gold standard for machine learning (ML) and AI development. CUDA is, unfortunately, closed source, but many open source projects have adopted it due to its dominance within the industry.

My primary objective when choosing a new video card was that it have enough VRAM to load a 65B LLaMA model (roughly 48GB). One option for doing this is to install two NVIDIA RTX 3090 or 4090 video cards, each with 24GB of VRAM, for a total of 48GB. This would meet my needs for running text generation models, but it would limit how I could use image generation models, which can’t be split between multiple video cards without a significant performance hit (if at all). So, a single card with 48GB of VRAM would be ideal for my use case.

Three options that I considered were the Quadro 8000, A40, and RTX A6000 Ampere. The Quadro 8000 uses the older Turing architecture, while the A40 and RTX A6000 use the Ampere architecture, one generation behind the latest Ada architecture (which was outside of my price range). The Quadro 8000 has a memory bandwidth of 672 GB/s, while the A40 has 696 GB/s and the A6000 has 768 GB/s. The Quadro 8000 also has far fewer CUDA cores than the other two cards: 4,608 vs. 10,752 for both the A40 and the A6000. Considering the specs, the A6000 was the better graphics card, but the A40 was a close second. However, the A40, even when found at a discount, would require a DIY forced-air cooling setup, because it is passively cooled and designed for rack-mounted servers with their own forced-air cooling systems. 3D-printed adapters that mate fans to the end of an A40 are available on eBay, or one could rig something up oneself. But, for my purposes, I wanted a good card with its own cooling solution and a warranty, so I went with the A6000 shown below.
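The weight memory of a model is roughly its parameter count times the bits stored per parameter. The Python sketch below applies that arithmetic to 65 billion parameters at a few precisions. It counts weights only: real usage adds the KV cache, activations, and framework overhead, and quantization formats store extra scale data, so treat these figures as lower bounds rather than exact file sizes.

```python
def weights_gb(n_params: float, bits_per_param: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


VRAM_GB = 48  # one 48GB card, or two 24GB cards combined

for bits in (16, 8, 4):
    need = weights_gb(65e9, bits)
    verdict = "fits" if need < VRAM_GB else "does not fit"
    print(f"65B at {bits:>2}-bit: ~{need:.1f} GB of weights, {verdict} in {VRAM_GB} GB")
```

Only the quantized versions come in under 48GB, and the remaining headroom is what the KV cache and runtime overhead consume during generation.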

Another benefit of the A6000 over the gaming-oriented 3090 and 4090 graphics cards is that it requires much less power: only 300 watts at load (vs. ~360 watts for the 3090 and 450 watts for the 4090). Even so, my existing power supply was only a generic 700-watt unit. I wanted to protect my investment in the A6000 and ensure it had all of the power that it needed, so I opted for a recognized name-brand PSU: a Corsair RM1000x. It’s a modular PSU that can provide up to 1,000 watts to the system (it only supplies what the components draw; it isn’t using 1,000 watts constantly). You can see the A6000 and Corsair PSU installed in my system below.
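A quick way to think about PSU headroom is to add up the expected component draw and compare it to the supply’s rating. In the hypothetical Python sketch below, the A6000’s 300 watts comes from the text and 105 watts is the Ryzen 7 5800X’s rated TDP; the 95 watts for everything else (drives, fans, RAM, motherboard) is an assumed placeholder, not a measurement.

```python
def psu_load_fraction(component_watts: dict[str, int], psu_watts: int) -> float:
    """Fraction of the PSU's rated capacity used by the summed component draw."""
    return sum(component_watts.values()) / psu_watts


parts = {
    "RTX A6000 (at load)": 300,
    "Ryzen 7 5800X (TDP)": 105,
    "everything else (assumed)": 95,
}

for psu in (700, 1000):
    print(f"{psu} W PSU: {psu_load_fraction(parts, psu):.0%} of rated capacity")
```

Because a PSU only delivers what the system draws, a larger unit mostly adds margin for transient spikes and future upgrades rather than raising power consumption.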

Now, instead of waiting 15-30 minutes for a response to a long prompt run on my CPU and system RAM, it takes mere seconds to load the model into the A6000’s VRAM and generate a response, as shown in the screenshot below of oobabooga’s text-generation-webui using TheBloke’s quantized Guanaco-65B model to provide definitions of science fiction for three different audiences. The tool running in the terminal in the lower right corner is NVIDIA’s System Management Interface, which can be opened by running “nvidia-smi -l 1” (the -l 1 option refreshes its output every second).
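Besides the live `-l` view, nvidia-smi can print selected fields as CSV with `--query-gpu` and `--format=csv,noheader,nounits`, which is handy for logging VRAM use while a model runs. The Python sketch below wraps that query and parses the result; it assumes an NVIDIA driver is installed, and the function names are illustrative. Memory values are reported in MiB and utilization in percent.

```python
import subprocess

QUERY = "name,memory.used,memory.total,utilization.gpu"


def parse_gpu_csv(text: str) -> list[dict[str, object]]:
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into one dict per GPU."""
    gpus = []
    for line in text.strip().splitlines():
        name, used, total, util = (part.strip() for part in line.split(","))
        gpus.append({
            "name": name,
            "memory_used_mib": int(used),
            "memory_total_mib": int(total),
            "utilization_pct": int(util),
        })
    return gpus


def query_gpus() -> list[dict[str, object]]:
    """Run nvidia-smi once (requires an NVIDIA driver) and parse its CSV output."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_csv(out)
```

Calling `query_gpus()` in a loop with a one-second sleep gives roughly the same refresh rate as `nvidia-smi -l 1`, but as data that can be written to a log.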

I’m now learning the programming language Python so that I can better understand the code underlying many of these tools and AI algorithms. If you are interested in getting involved in generative AI technology, I recently wrote about LinkedIn Learning as a good place to get started, but you can also check out the resources in my generative AI bibliography.